
Katherine Cross, who researches online harassment at the University of Washington, says that when virtual reality is immersive and real, so is the toxic behavior that occurs in that environment. “At the end of the day, the nature of virtual reality spaces is that they are designed to trick the user into thinking that they are physically in a certain space and that their every bodily action is taking place in a 3D environment,” she says. “This is one reason why emotional responses can be stronger in that space and why VR triggers the same internal nervous system and psychological responses.”

That was true for the woman who was groped on Horizon Worlds. According to The Verge, her post read: “Sexual harassment is no joke on the regular internet, but being in VR adds another layer that makes the event more intense. Not only was I groped last night, but there were other people there who supported this behavior, which made me feel isolated in the Plaza [the virtual environment’s central gathering space].”

Sexual assault and harassment in virtual worlds are not new, and it is unrealistic to expect a world in which these problems disappear completely. As long as there are people who hide behind their computer screens to evade moral responsibility, these problems will persist.

Perhaps the real problem has to do with the perception that when you play a game or join a virtual world, there is what Stanton describes as “a contract between the developer and the player.” “As a player, I agree to being able to do whatever I want in the developer’s world according to their rules,” he says. “But as soon as that contract is broken and I don’t feel comfortable anymore, the company’s obligation is to return the player to wherever they want to be and back to being comfortable.”

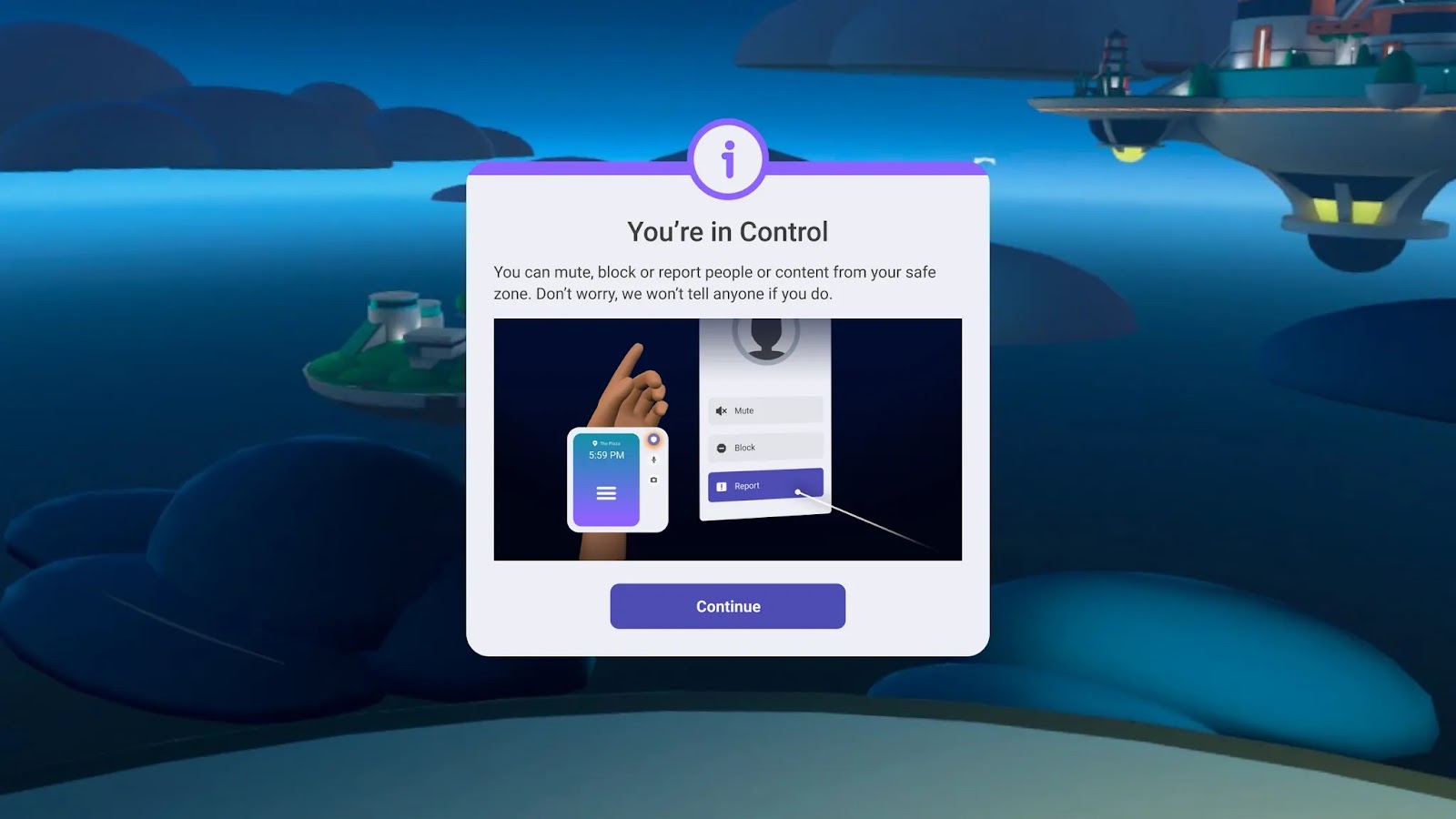

The question is: whose responsibility is it to ensure users’ comfort? Meta, for example, says it gives users access to tools to keep themselves safe, effectively shifting the onus onto them.

“We want everyone in Horizon Worlds to have a positive experience with safety tools that are easy to find, and it’s never a user’s fault if they don’t use all the features we offer,” said Meta spokesperson Kristina Milian. “We will continue to improve our UI and to better understand how people use our tools so that users can report things easily and reliably. Our goal is to make Horizon Worlds safe, and we are committed to doing that.”

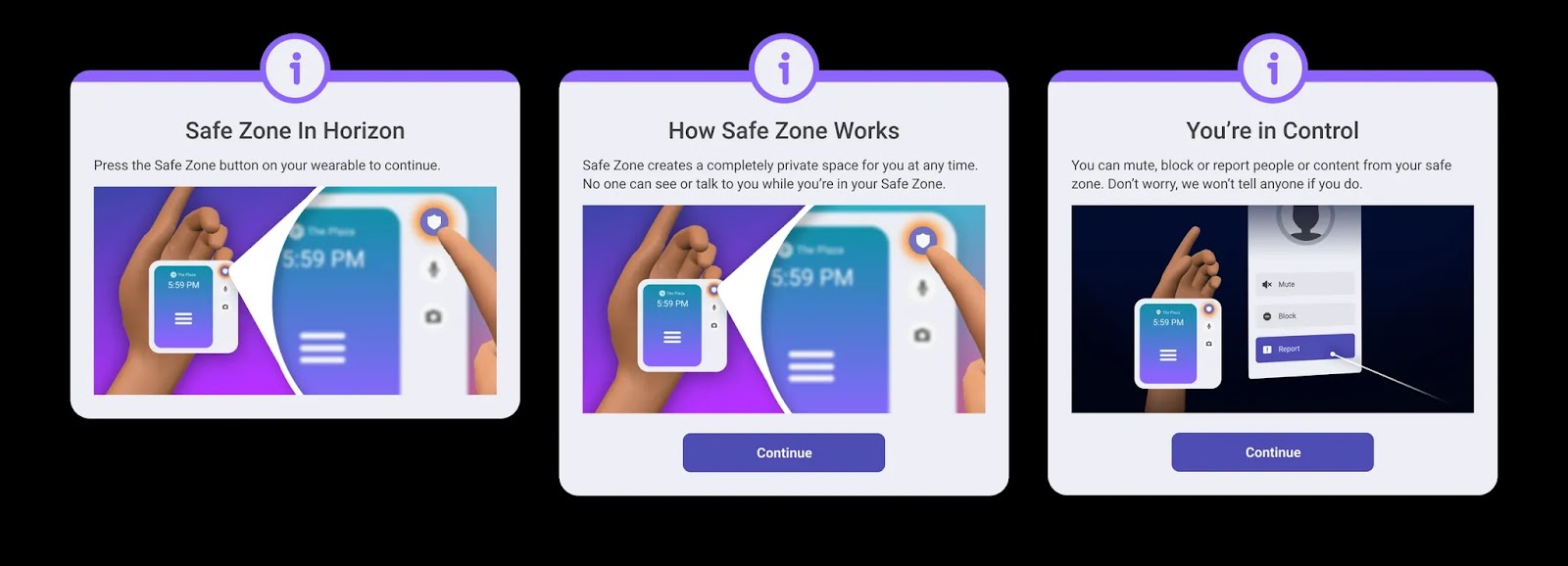

Milian said that before joining Horizon Worlds, users must go through an onboarding process that teaches them how to launch Safe Zone. She also said regular reminders are loaded into screens and posters within Horizon Worlds.

However, the fact that the victim of the Meta groping either did not think to use Safe Zone or could not access it is precisely the problem, says Cross. “The structural question is the big one for me,” she says. “Broadly speaking, when companies tackle online abuse, their solution is to outsource it to the user and say, ‘Here, we give you the power to take care of yourselves.’”

And that is unfair, and it doesn’t work. Safety should be easy and accessible, and there are many ideas for making that possible. According to Stanton, some kind of universal signal in virtual reality (perhaps QuiVr’s V gesture) could tell moderators that something is wrong. Fox wonders whether an automatic personal distance, which would apply unless two people mutually agree to be closer, would help. And Cross believes it would be useful for training sessions to explicitly lay out norms that mirror those of everyday life: “In the real world, you don’t just randomly grope someone, and you have to carry that over into the virtual world.”

Until we figure out whose job it is to protect users, one important step toward a safer virtual world is disciplining aggressors, who often go unpunished and remain eligible to participate online even after their behavior becomes known. “We need deterrents,” Fox says. That means making sure bad actors are found and suspended or banned. (When asked what happened to the alleged groper, Milian said Meta “[doesn’t] share details on individual cases.”)

Stanton regrets that he didn’t push harder for industry-wide adoption of the power gesture and that he didn’t talk more about Belamire’s groping incident. “It was a lost opportunity,” he says. “We could have avoided that incident at Meta.”

One thing is clear: there is no body that is explicitly responsible for the rights and safety of those who participate anywhere online, let alone in virtual worlds. Until something changes, the metaverse will remain a dangerous and problematic space.
