
After more than a year of the covid-19 pandemic, millions of people are looking for jobs in the United States. AI-powered interview software claims to help employers sift through applications to find the best people for the job. Companies specializing in this technology reported an increase in business during the pandemic.

However, as demand for these technologies increases, so do questions about their accuracy and reliability. In the latest episode of MIT Technology Review’s podcast “In Machines We Trust,” we tested software from two companies specializing in AI job interviews, MyInterview and Curious Thing. We found variations in the predictions and job-matching scores that raise concerns about what exactly these algorithms are evaluating.

Getting to know you

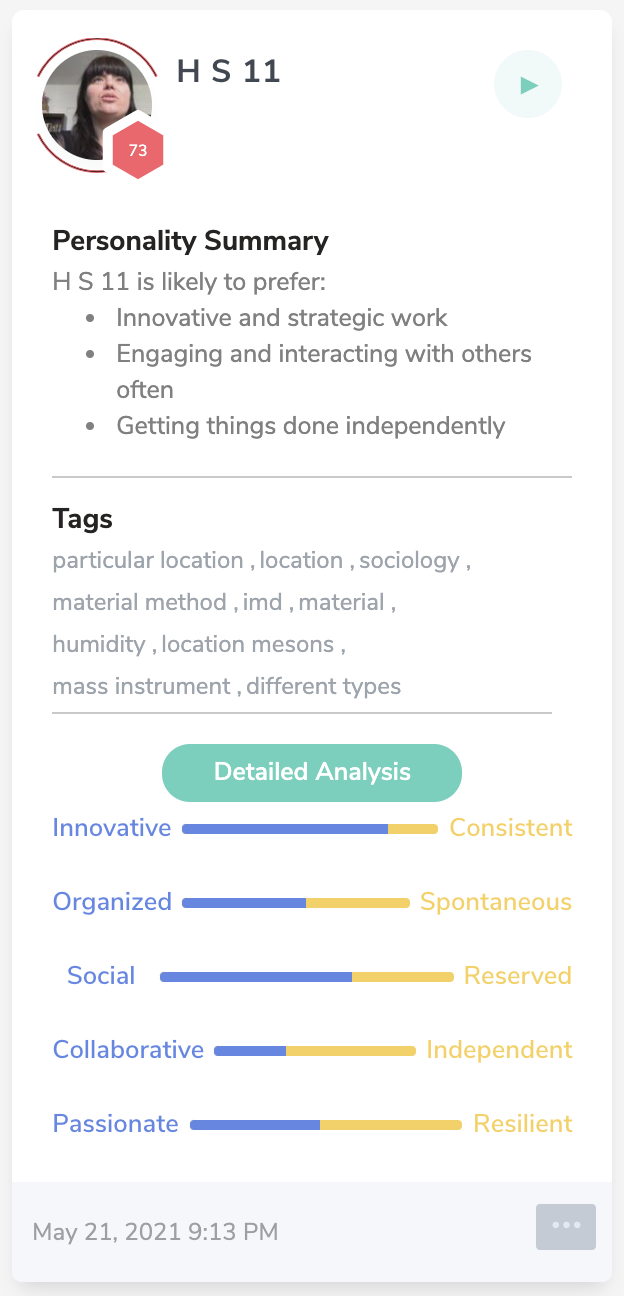

MyInterview measures the characteristics considered in the Big Five Personality Test, a psychometric assessment often used in the recruitment process. These traits include openness, conscientiousness, extroversion, agreeableness, and emotional stability. Curious Thing also measures personality traits, but candidates are judged on other criteria, such as humility and endurance, rather than the Big Five.

HILKE SCHELLMANN

The algorithms analyze candidates’ responses to identify personality traits. MyInterview also compiles scores that show how closely a candidate matches the characteristics identified by hiring managers as ideal for the position.

To complete our tests, we first set up the software. We uploaded a fake job posting for an office manager/researcher to both MyInterview and Curious Thing. Then we created our ideal candidate by selecting personality-related traits when prompted by the system.

In MyInterview, we selected traits such as attention to detail and ranked them by importance. We also selected the interview questions, which are displayed on the screen while the candidate records video responses. In Curious Thing, we chose traits such as humility, adaptability, and flexibility.

One of us, Hilke, then applied for the position and completed interviews for the role on both MyInterview and Curious Thing.

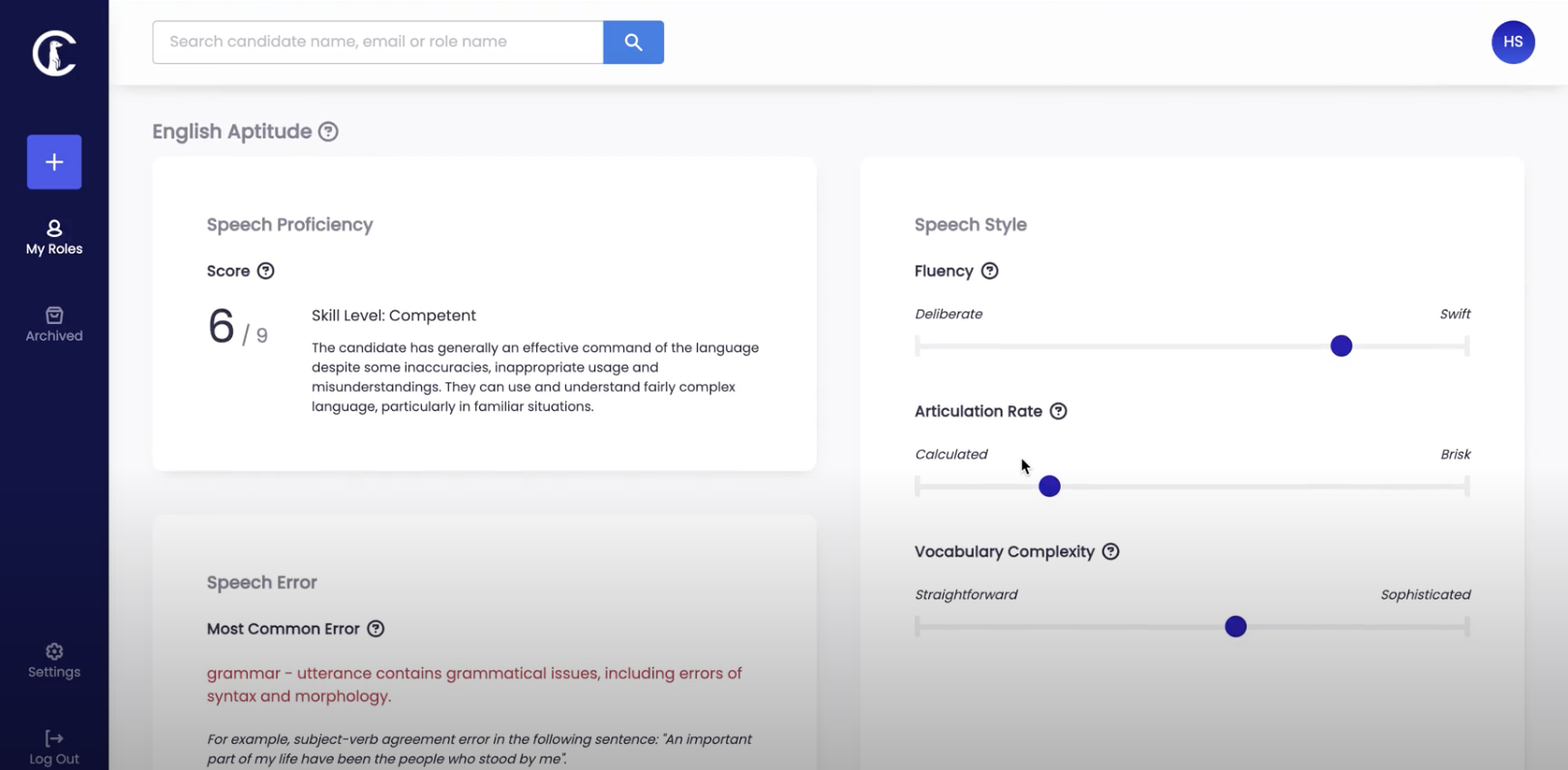

Our candidate completed a phone interview with Curious Thing. She did a regular job interview first and got an 8.5 out of 9 for her English proficiency. In a second attempt, the automated interviewer asked the same questions, and she responded to each by reading the Wikipedia entry for psychometrics aloud in German.

Still, Curious Thing gave her a 6 out of 9 for her English proficiency. She completed the interview again and got the same score.

HILKE SCHELLMANN

Our candidate then turned to MyInterview and repeated the experiment. She read the same Wikipedia entry aloud in German. The algorithm not only returned a personality assessment, but also predicted that our candidate would be a 73% match for the fake job, placing her in the top half of all the applicants we had asked to apply.
